By Tela Mathias

The key theme of day one at the AI Engineer World’s Fair was evaluations (and agents, of course). I am up to my eyeballs in evals as we prepare for enterprise adoption of Phoenix Burst, so the timing was apropos. As a refresher, evaluations are:

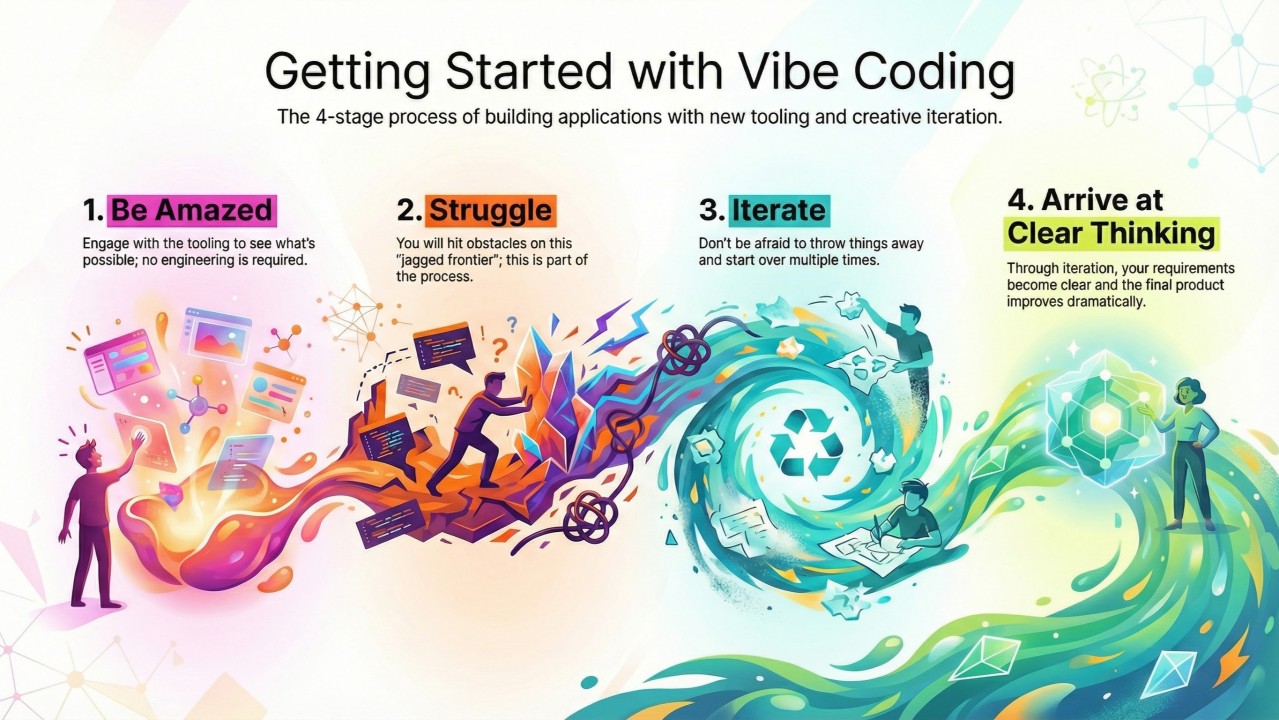

Think of evals as the way we test genAI systems to ensure they are actually good. Many of you have heard me say this before – but every genAI demo is amazing. As long as the vibe is good, you can generally have a great demo. But the real value is in the content, and if the content is not “better” then really you don’t have much. Evals are how you measure better. I’ve written before about evals as the new SLAs, and that continues to be true. It’s not real until you understand the evals.

Great session with Taylor Smith. I’m already at least an amateur when it comes to evals, certainly no Eugene Yan but I can hold my own. This 80-minute workshop included at least 45 minutes of a quasi-doomed-from-the-start hands-on activity. As usual, as soon as the Jupyter notebooks come out, I know it’s time for me to step out. But the content and presenter were great. I hadn’t thought about it this way before, but she placed benchmarking within the context of the superset of model evaluations. In other words, model benchmarks are just one specialized form of evaluation.

We homed in on two major forms of evaluation – system performance and model performance – both of which are equally important. Model performance is primarily about content, while system performance covers the AI-flavored versions of traditional metrics (latency, throughput, cost, scalability). She placed these within an “evaluation pyramid”.

Tela’s advice – it’s easy to get stuck in eval purgatory, going round and round and round forever and getting nowhere. Just start. It’s easy to start (and much harder to scale) but there’s a framework. Here I am talking specifically about domain-specific content evals. These are the differentiated aspects of your application – your moat.
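To make "just start" concrete, here is a minimal sketch of a domain-specific content eval. Everything in it is an assumption for illustration: the `EvalCase` structure, the keyword-based `score_case` check, the mortgage-flavored sample cases, and the `fake_model` stub all stand in for whatever your real application and grading criteria look like.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One hand-curated example: a prompt plus facts a good answer must mention."""
    prompt: str
    required_facts: list[str]


def score_case(answer: str, case: EvalCase) -> float:
    """Fraction of required facts that appear in the answer (case-insensitive)."""
    text = answer.lower()
    hits = sum(1 for fact in case.required_facts if fact.lower() in text)
    return hits / len(case.required_facts)


def run_evals(model: Callable[[str], str], cases: list[EvalCase],
              threshold: float = 1.0) -> dict:
    """Run every case through the model and report mean score and pass rate."""
    scores = [score_case(model(c.prompt), c) for c in cases]
    passed = sum(1 for s in scores if s >= threshold)
    return {
        "mean_score": sum(scores) / len(scores),
        "pass_rate": passed / len(scores),
        "scores": scores,
    }


# Hypothetical stand-in for a real genAI call.
def fake_model(prompt: str) -> str:
    if "escrow" in prompt:
        return "An escrow account holds funds for property taxes and insurance."
    return "I am not sure."


# Hypothetical curated cases; real ones come from your domain experts.
CASES = [
    EvalCase("What does an escrow account pay for?", ["property taxes", "insurance"]),
    EvalCase("What is a loan estimate?", ["closing costs", "interest rate"]),
]

report = run_evals(fake_model, CASES)
print(report)
```

A keyword check like this is crude, but it gives you a number to track from day one. Scaling is the harder part: more cases, better graders, and curated expected answers.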

Trust me, you need evals. Evals are the only reliable way to get to production. And we are up to our eyeballs in them. So much gratitude for Vicki Lowe Withrow and her amazing curation team. But I digress. Two great examples of why you need evals – Stable Diffusion and the infamous Google AI glue pizza incident.

Bloomberg found that “the world, according to Stable Diffusion is run by white male CEOs, women are rarely doctors, lawyers, or judges, men with dark skin commit crimes, while women with dark skin flip burgers.” Yikes. Come on guys, we can do better.

Why does this happen?

arXiv (pronounced “archive”) is where most AI papers are published first, sometimes months before they get peer reviewed and fully vetted. A paper by researchers at Rice University (Professor Richard Baraniuk) called Self-Consuming Generative Models Go MAD pointed out that “our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. We term this condition Model Autophagy Disorder (MAD), making analogy to mad cow disease.”

An autophagous loop (also called a self-consuming loop) is when AI models are trained on data generated by previous AI models, creating a feedback cycle where the model essentially "eats its own tail".
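A toy illustration of the idea, not the experiment from the paper: the sketch below assumes the "model" is just a Gaussian fitted to a small sample, and each generation is trained on samples drawn from the previous fit. The function name `simulate_loop` and its parameters (`sample_size`, `fresh_fraction`, `seed`) are made up for this example.

```python
import random
import statistics


def simulate_loop(generations: int = 200, sample_size: int = 20,
                  fresh_fraction: float = 0.0, seed: int = 0) -> list[float]:
    """Repeatedly fit a Gaussian to data sampled from the previous fit.

    Returns the fitted standard deviation (the "diversity") per generation,
    starting with the real distribution's value of 1.0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" distribution
    n_fresh = round(sample_size * fresh_fraction)
    history = [sigma]
    for _ in range(generations):
        # Synthetic data from the current model, plus optional fresh real data.
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_fresh)]
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
        # "Train" the next model: here, just refit the mean and the spread.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
    return history


closed_loop = simulate_loop(fresh_fraction=0.0)
mixed_loop = simulate_loop(fresh_fraction=0.5)
print(f"no fresh data:  spread 1.0 -> {closed_loop[-1]:.6f}")
print(f"50% fresh data: spread 1.0 -> {mixed_loop[-1]:.6f}")
```

With no fresh data, the fitted spread typically drifts toward zero as small sampling errors compound generation after generation. Mixing real samples back in keeps it anchored. Real generative models are far more complex, so treat this only as intuition for why fresh data matters.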

I randomly met Papanii Okai standing in the hallway waiting for this one to start, what a small world. I mean “of all the gin joints in all the towns in all the world…”. Was great to see a fellow industry zealot out in the wild. Jupyter notebooks also made a significant appearance at this one, which is where I made my exit. The opening was a lot of primer material on agents, but we did get into patterns for creating multimodal agents, which we have done, but I hadn’t put it together that that’s what we did.

Quick refresher – there are four main components of an AI agent: perception (how it gets information), planning and reasoning, tools (external interfaces, actions), and memory.
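The four components can be sketched as a tiny class. This is a hypothetical skeleton, not any framework's API: the `Agent` class, its method names, and the two toy tools are assumptions, and the `plan` step uses a keyword match where a real agent would call an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    # Tools: external interfaces and actions, keyed by name.
    tools: dict[str, Callable[[str], str]]
    # Memory: a running log of what the agent has done.
    memory: list[str] = field(default_factory=list)

    def perceive(self, raw_input: str) -> str:
        """Perception: turn incoming information into something usable."""
        return raw_input.strip().lower()

    def plan(self, observation: str) -> str:
        """Planning and reasoning: pick a tool (keyword match as a placeholder)."""
        for name in self.tools:
            if name in observation:
                return name
        return "echo"  # fallback; assumes an "echo" tool is registered

    def step(self, raw_input: str) -> str:
        observation = self.perceive(raw_input)
        tool_name = self.plan(observation)
        result = self.tools[tool_name](observation)
        self.memory.append(f"{tool_name}: {result}")
        return result


agent = Agent(tools={
    "echo": lambda text: text,
    "count": lambda text: str(len(text.split())),
})
print(agent.step("  Count the words in this sentence  "))
print(agent.memory)
```

A multimodal agent changes mostly the perception step, where images, audio, or documents get turned into something the planner can reason over.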

Multimodality refers to the ability of machine learning models to process, understand, and generate data in different forms. I didn’t realize there were different embedding models for different media, which, of course, makes sense. The really big takeaway from this session was the emerging alternatives to typical RAG approaches to parsing, chunking, and vectorizing – which many of us know is a major pain in the ass.

There is a new type of transformer available, VLM (vision language model) based, where “screenshots are all you need” (see what they did there?). Preparing mixed modality data for retrieval can require data transformers, vision transformers, and possibly table-to-text converters. This alternative has the document snapped one page at a time and fed into the VLM. The amazing benefits of this were espoused, but the audience was skeptical and brought up many valid questions that had so-so answers. Worth looking at for sure, but the silver bullet we all want is not yet out there.

Ilan Bigio was a great presenter. There was a lot of good content covered here, even though it wandered a bit at the end. The basic message, reinforced up front, is to stick with prompt engineering/tuning as long as possible, until you really know that you can do better – then consider fine tuning. Meaning, you have good command of your evals, you know you can do better, and you have exhausted what can be done with prompting. This was validating.

As a refresher for some of us, prompting is like a bunch of general-purpose tools that you can use to do a wide variety of things. Fine tuning is like a precision laser-guided table saw. You can do a smaller number of things incredibly well. Prompting has a low barrier, low(er) cost (relative to fine tuning), and is generally enough for most problems. Fine tuning incurs a higher up-front cost, takes longer to implement, and is good for specialized performance gains of a particular type.

Three types of fine tuning were covered – supervised fine tuning (SFT), direct preference optimization (DPO), and reinforcement fine tuning (RFT). Think of SFT as “imitation”, DPO as “more like this and less like that”, and RFT as the epic “learn to figure it out”. I’m not fully grasping how RFT works but…wait for it… I’ll figure it out. (See what I did there?)
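One way to see the difference is to look at what each method learns from. The sketch below is illustrative only: the record field names and the sample text are hypothetical, not any vendor's schema, and `rft_grader` is a made-up scoring rule. The `dpo_loss` function follows the standard DPO objective, the negative log-sigmoid of a scaled preference margin measured against a frozen reference model.

```python
import math

# SFT ("imitation"): a prompt paired with the exact answer to copy.
sft_example = {
    "prompt": "Define APR.",
    "completion": "APR is the annual cost of a loan, including fees.",
}

# DPO ("more like this, less like that"): one preferred and one rejected answer.
dpo_example = {
    "prompt": "Define APR.",
    "chosen": "APR is the annual cost of a loan, including fees.",
    "rejected": "APR is a type of house.",
}


# RFT ("learn to figure it out"): no target answer, only a score to maximize.
def rft_grader(answer: str) -> float:
    """Hypothetical grader: reward answers that mention the key concept."""
    return 1.0 if "annual" in answer.lower() else 0.0


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given log-probabilities of each answer.

    margin > 0 means the policy favors the chosen answer more than the
    reference model does, which lowers the loss.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(x)) written as log(1 + exp(-x))
    return math.log1p(math.exp(-beta * margin))


print(dpo_loss(-1.0, -5.0, -2.0, -2.0))  # policy already prefers "chosen"
print(dpo_loss(-5.0, -1.0, -2.0, -2.0))  # policy prefers "rejected": higher loss
print(rft_grader(dpo_example["chosen"]), rft_grader(dpo_example["rejected"]))
```

Notice that all three depend on knowing what "good" looks like, which is another reason to have command of your evals before reaching for fine tuning.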

I had high hopes for the AWS and Anthropic event, especially because I had to effectively submit a proposal for why I should be accepted to attend this event. I figured if there was an application process, surely this would be top notch. Two highlights here, hearing directly from Anthropic about their new products and vision for the future, and, of course, finding the non-men at the event. Yes, as per usual, it’s a sea of men at these events (nothing against men), with just a light dusting of non-men. I did manage to find a few of my people.

I was not aware of what could be done with Claude Code until seeing the demo at this event, and it was powerful. It has the feel of a command line application, but I have no doubt that I will be able to use it effectively based on what I saw. I suspect it will be able to do a lot of things that the team currently does in Replit, perhaps more effectively.

One of the most interesting parts of the day came at the very end. After leaving the okish AWS/Anthropic event early, I decided to head over to the host hotel for the MongoDB welcome reception. I met the very interesting Mark Myshatyn, who is literally “the AI guy” for Los Alamos Labs. I asked him, “So what exactly does Los Alamos Labs do?” and he goes, “Did you see Oppenheimer? We do that.” Whoa. He’ll be speaking at the AWS Summit in DC, so if you are attending, you won’t want to miss it.

It was an amazing day, and we are just getting started. The people, the content, the experience – so worth it. I do these events so I can keep up with what’s going on and adapt it to the work I do for my mortgage clients. I intend to: